Neuroscience

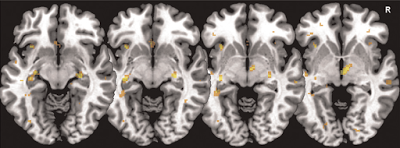

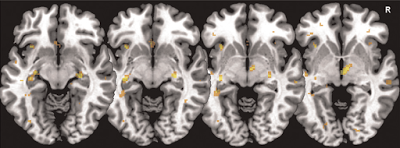

Modified from Fig. 1B (Sato et al., 2015). Weight vector maps highlighting voxels among the 1% most discriminative for remitted major depression vs. controls, including the subgenual cingulate cortex, both hippocampi, the right thalamus and the anterior insulae.




The Idiosyncratic Side of Diagnosis by Brain Scan and Machine Learning


R2D3 recently had a fantastic Visual Introduction to Machine Learning, using the classification of homes in San Francisco vs. New York as their example. As they explain quite simply:

In machine learning, computers apply statistical learning techniques to automatically identify patterns in data. These techniques can be used to make highly accurate predictions.

You should really head over there right now to view it, because it's very impressive.

Computational neuroscience types are using machine learning algorithms to classify all sorts of brain states, and diagnose brain disorders, in humans. How accurate are these classifications? Do the studies all use separate training sets and test sets, as shown in the example above?

Let's say your fMRI measure is able to differentiate individuals with panic disorder (n=33) from those with panic disorder + depression (n=26) with 79% accuracy.1 Or with structural MRI scans you can distinguish 20 participants with treatment-refractory depression from 21 never-depressed individuals with 85% accuracy.2 Besides the issues outlined in the footnotes, the “reality check” is that the model must be able to predict group membership for a new (previously unseen) data set. And most studies don't seem to do this.

I was originally drawn to the topic by a three-page article entitled “Machine learning algorithm accurately detects fMRI signature of vulnerability to major depression” (Sato et al., 2015). Wow! Really? How accurate? Which fMRI signature? Let's take a look.

- machine learning algorithm = Maximum Entropy Linear Discriminant Analysis (MLDA)

- accurately detects = 78.3% accuracy (72.0% sensitivity and 85.7% specificity)

- fMRI signature = “guilt-selective anterior temporal functional connectivity changes” (seems a bit overly specific and esoteric, no?)

- vulnerability to major depression = 25 participants with remitted depression vs. 21 never-depressed participants
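The figures in the list above hang together arithmetically, which is worth checking for yourself. Here is a minimal sketch in Python. The raw counts are an assumption on my part (they are not given in this post): 18 of 25 and 18 of 21 are the only whole-number splits that reproduce 72.0% and 85.7% for these group sizes.

```python
# Assumed counts, inferred from the reported percentages and group sizes.
remitted_total, control_total = 25, 21
true_positives = 18   # remitted participants the classifier flagged (assumed)
true_negatives = 18   # never-depressed participants it correctly cleared (assumed)

sensitivity = true_positives / remitted_total
specificity = true_negatives / control_total
accuracy = (true_positives + true_negatives) / (remitted_total + control_total)
missed = (remitted_total - true_positives) / remitted_total

print(f"sensitivity={sensitivity:.1%} specificity={specificity:.1%}")
# → sensitivity=72.0% specificity=85.7%
print(f"accuracy={accuracy:.1%} missed={missed:.0%}")
# → accuracy=78.3% missed=28%
```

Under those assumed counts, 7 of the 25 remitted participants are not picked up by the classifier, and 3 of the 21 never-depressed participants are flagged.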

Nor did they try to compare individuals who are currently depressed to those who are currently remitted. That didn't matter, apparently, because the authors suggest the fMRI signature is a trait marker of vulnerability, not a state marker of current mood. But the classifier missed 28% of the remitted group who did not have the “guilt-selective anterior temporal functional connectivity changes.”

What is that, you ask? This is a set of mini-regions (i.e., not too many voxels in each) functionally connected to a right superior anterior temporal lobe seed region of interest during a contrast of guilt vs. anger feelings (selected from a number of other possible emotions) for self or best friend, based on written imaginary scenarios like “Angela [self] does act stingily towards Rachel [friend]” and “Rachel does act stingily towards Angela” conducted outside the scanner (after the fMRI session is over). Got that?

You really need to read a bunch of other articles to understand what that means, because the current paper is less than 3 pages long. Did I say that already?

The patients were previously diagnosed according to DSM-IV-TR (which was current at the time), and in remission for at least 12 months. The study was conducted by investigators from Brazil and the UK, so they didn't have to worry about RDoC, i.e. “new ways of classifying mental disorders based on behavioral dimensions and neurobiological measures” (instead of DSM-5 criteria). A “guilt-proneness” behavioral construct, along with the “guilt-selective” network of idiosyncratic brain regions, might be more in line with RDoC than past major depression diagnosis.

Could these results possibly generalize to other populations of remitted and never-depressed individuals? Well, the fMRI signature seems a bit specialized (and convoluted). And overfitting is another likely problem here...

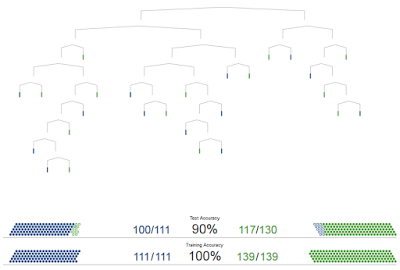

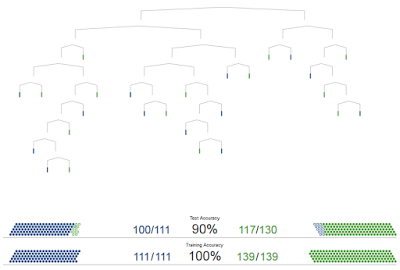

In their next post, R2D3 will discuss overfitting:

Ideally, the [decision] tree should perform similarly on both known and unknown data.

So this one is less than ideal. [NOTE: the one that's 90% in the top figure]

These errors are due to overfitting. Our model has learned to treat every detail in the training data as important, even details that turned out to be irrelevant.
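To see how badly training accuracy can mislead, here is a toy sketch of overfitting in Python. It is not R2D3's decision tree and has nothing to do with the Sato et al. pipeline: I've swapped in a 1-nearest-neighbour classifier because it memorizes its training data completely, and the "participants" are pure random noise with coin-flip labels (all names and sizes below are illustrative assumptions).

```python
import random

def nearest_label(point, examples):
    """Return the label of the training example closest to `point` (1-NN)."""
    def squared_distance(example):
        features, _ = example
        return sum((a - b) ** 2 for a, b in zip(point, features))
    return min(examples, key=squared_distance)[1]

def accuracy(examples, training):
    """Fraction of `examples` whose label matches the 1-NN prediction."""
    hits = sum(nearest_label(x, training) == y for x, y in examples)
    return hits / len(examples)

def noise_dataset(rng, n, n_features=20):
    # Gaussian features and coin-flip labels: there is nothing real to learn.
    return [([rng.gauss(0, 1) for _ in range(n_features)], rng.randint(0, 1))
            for _ in range(n)]

rng = random.Random(0)
train = noise_dataset(rng, 46)    # same size as the Sato et al. sample
test = noise_dataset(rng, 200)    # "new" participants the model never saw

train_acc = accuracy(train, train)   # every point is its own nearest neighbour
test_acc = accuracy(test, train)
print(f"training accuracy: {train_acc:.0%}, held-out accuracy: {test_acc:.0%}")
```

The classifier scores perfectly on the data it was fit to and hovers around chance on the held-out set, because every "pattern" it learned was an irrelevant detail of the training sample.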

In my next post, I'll present an unsystematic review of machine learning as applied to the classification of major depression. It's notable that Sato et al. (2015) used the word “classification” instead of “diagnosis.”3

ADDENDUM (Aug 3 2015): In the comments, I've presented more specific critiques of: (1) the leave-one-out procedure and (2) how the biomarker is temporally disconnected from when the participants identify their feeling as 'guilt', 'anger', etc. (and why shame is more closely related to depression than guilt).
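For readers unfamiliar with leave-one-out, here is a rough Python sketch of the general idea: fit the model on everyone except one participant, test on that participant, and repeat for each person. The nearest-centroid classifier and the one-feature toy data are stand-ins I chose for brevity, not the MLDA method or the data from the paper.

```python
import random

def centroid(rows):
    """Mean feature vector of a list of feature rows."""
    return [sum(col) / len(rows) for col in zip(*rows)]

def predict(point, centroids):
    """Return the label whose centroid is closest to `point`."""
    def squared_distance(label):
        return sum((a - b) ** 2 for a, b in zip(point, centroids[label]))
    return min(centroids, key=squared_distance)

def leave_one_out_accuracy(features, labels):
    # Assumes every group has at least two members.
    hits = 0
    for i in range(len(features)):
        # Fit on everyone except participant i ...
        centroids = {}
        for label in set(labels):
            rows = [f for j, (f, lab) in enumerate(zip(features, labels))
                    if j != i and lab == label]
            centroids[label] = centroid(rows)
        # ... then score only the participant who was left out.
        hits += predict(features[i], centroids) == labels[i]
    return hits / len(features)

rng = random.Random(1)
# Hypothetical data: 25 "remitted" and 21 "control" participants, one feature.
features = ([[rng.gauss(1.0, 1.0)] for _ in range(25)]
            + [[rng.gauss(0.0, 1.0)] for _ in range(21)])
labels = ["remitted"] * 25 + ["control"] * 21
print(f"leave-one-out accuracy: {leave_one_out_accuracy(features, labels):.1%}")
```

Note that every participant still comes from the same sample, so this is not the same thing as testing on an independently collected data set.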

Footnotes

1 The sensitivity (true positive rate) was 73% and the specificity (true negative rate) was 85%. After correcting for confounding variables, these numbers were 77% and 70%, respectively.

2 The abstract concludes this is a “high degree of accuracy.” Not to pick on these particular authors (this is a typical study), but Dr. Dorothy Bishop explains why this is not very helpful for screening or diagnostic purposes. And what you'd really want to do here is to discriminate between treatment-resistant vs. treatment-responsive depression. If an individual does not respond to standard treatments, it would be highly beneficial to avoid a long futile period of medication trials.

3 In case you're wondering, the title of this post was based on The Dark Side of Diagnosis by Brain Scan, which is about Dr Daniel Amen. The work of the investigators discussed here is in no way, shape, or form related to any of the issues discussed in that post.
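A note on footnote 2: one standard reason that accuracy figures like these fall short for screening is base rates. The sketch below, in Python, applies Bayes' rule to the 72.0% sensitivity and 85.7% specificity quoted above for Sato et al. The prevalence values are hypothetical, chosen only to show the trend, and I am not claiming this is the exact argument made in the linked post.

```python
def positive_predictive_value(sensitivity, specificity, prevalence):
    """Probability that a positive result is a true positive (Bayes' rule)."""
    true_pos = sensitivity * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    return true_pos / (true_pos + false_pos)

# Hypothetical prevalences: a balanced study sample vs. rarer conditions.
for prevalence in (0.5, 0.1, 0.01):
    ppv = positive_predictive_value(0.72, 0.857, prevalence)
    print(f"prevalence {prevalence:.0%}: positive predictive value {ppv:.0%}")
```

The same classifier looks far less useful as the condition becomes rarer in the population being screened, because false positives from the large unaffected group swamp the true positives.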

Reference

Sato, J., Moll, J., Green, S., Deakin, J., Thomaz, C., & Zahn, R. (2015). Machine learning algorithm accurately detects fMRI signature of vulnerability to major depression. Psychiatry Research: Neuroimaging. DOI: 10.1016/j.pscychresns.2015.07.001
