Neuroscience

Several interesting blog posts, discussions on Twitter and regular media articles (e.g. Times Higher Education) have recently focused on the role of negative (so-called null) findings and the publish or perish culture.

In his blog, Pete gives the following description of how we generally think that null findings influence the scientific process

More recently Fanelli (2010) confirmed the earlier reports about psychology/psychiatry being especially prone to the bias of publishing positive findings. Table 2 below outlines the probability for a paper to report positive results in various disciplines. It is evident that, compared to every other discipline, Psychology fares the worst - being five times more likely as the baseline (space science) to publish positive results! We might ask 'why' psychology? and what effect does it have?

The File Drawer effect and Fail-Safes

Obviously meta-analysis is based on quantitatively summarising the findings that are accessible (tending to be those published of course). This raises the so-called file-drawer effect, whereby negative studies may be tucked away in a file drawer because they are viewed as less publishable. It is possible in meta analysis to statistically estimate the file-drawer effect - the original and still widely used method is the Fail-Safe statistic devised by Orwin, which essentially estimates how many unpublished negative studies would be need to overturn a significant effect size in a meta analysis. A marginal effect size may require just one or two unpublished negative studies to overturn it, while a strong effect may require thousands of unpublished negative studies to eliminate the effect.

So, at least we have a method for estimating the potential influence of negative unpublished studies.

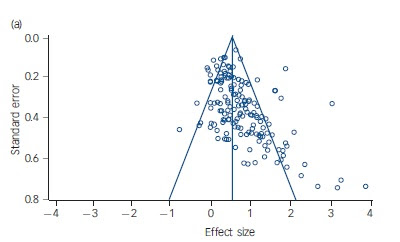

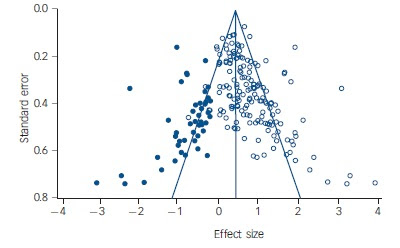

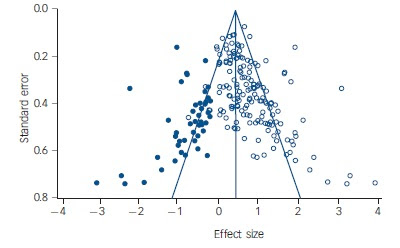

A standard way to look for bias in a meta analysis is to examine funnel plots of the individual study effect sizes plotted against their sample sizes or the standard errors. When no bias exists, studies with smaller error and larger sample sizes cluster around the mean effect size. By contrast, smaller samples and greater error variance produce far more variable effect sizes (in the tails). Ideally, we should observe a nicely symmetrical inverted funnel shape.

Turning to Cuijpers paper, the first figure below is clearly asymmetrical, showing a lack of negative findings (left side). Statistical techniques now exist for imputing or filling in these assumed missing values (see figure below where this has been done). The lower funnel plot gives a more realistic picture and adjusts the overall effect size downwards as a consequence (Cuijpers imputed 51 missing negative findings - the dark circles) - which reduced the effect size considerably from 0.67 to 0.42.

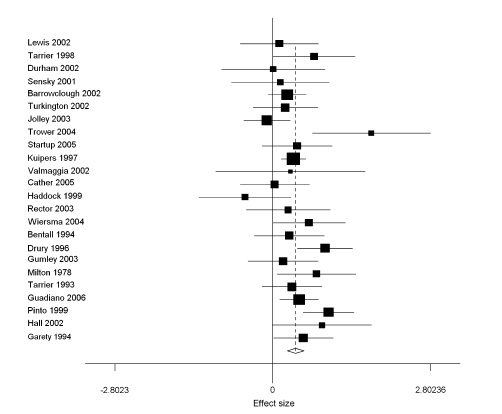

Although some may disagree, one area that I think I know a fair bit about is the use of CBT for psychosis. The following forest plot in Figure 2 is taken from a meta analysis of CBT for psychosis from Wykes et al (2008).

In meta analysis, forest plots are often one of the most informative sources of information - because they reveal much about the individuals studies. This example shows 24 published studies. The crucial information here, however, concerns not the magnitude of individual effect sizes (where the rectangle sits on the X axis), but

...the confidence intervals - these tell us everything!

When the confidence intervals pass through zero, we know the effect size was nonsigificant. So, looking at this forest plot only 6/24 (25%) studies show clear evidence of being significant trials(Trower 2004; Kuipers 1997; Drury 1997; Milton 1978; Guadiano 2006; Pinto 1999). Although only one quarter of all trials were clearly significant, the overall effect is significant (around 0.4 as indicated by the diamond at the foot of the figure)

In other words, it is quite possible for a vast majority of negative (null) findings to produce an overall significant effect size - surprising? Other examples exist (e.g. streptokinase: Lau et al 1992; for a recent example, see Rerkasem & Rothwell (2010) and indeed, I referred to one in my recent blog "What's your Poison?" on the meta analysis assessing LSD as a treatment for alcoholism (where no individual study was significant!).

Some argue that the negative studies are only negative because they are underpowered - however, this only seems likely with a moderate-large effect size that produces a nonsignificant statistical result. And further speculate that a large trial will prove the effectiveness of the treatment' however, when treatments have subsequently been evaluated in definitive large trials, they have often failed to reach significance. Egger and colleagues have written extensively on the unreliability of conclusions in meta-analyses where small numbers of nonsignificant trials are pooled to produce significant effects (Egger & Davey Smith. 1995)

So, negative or null findings are perhaps more and less worrisome than we may think. Its not just an issue of not publishing negative results in psychology, its also an issue of what to do with them when we have them

Keith R Laws (2012). Negativland: what to do about negative findings http://keithsneuroblog.blogspot.co.uk/2012/07/negativland-what-to-do-about-negative.html

Negativland: What to do about negative findings?

Elephant in the Room (by Banksy)

"This violent bias of classical procedures [against the null hypothesis] is not an unmitigated disaster. Many null hypotheses tested by classical procedures are scientifically preposterous, not worthy of a moment's credence even as approximations. If a hypothesis is preposterous to start with, no amount of bias against it can be too great. On the other hand, if it is preposterous to start with, why test it?"

Ward Edwards, Psychological Bulletin (1965)

Several interesting blog posts, discussions on Twitter and regular media articles (e.g. Times Higher Education) have recently focused on the role of negative (so-called null) findings and the publish or perish culture.

In his blog, Pete gives the following description of how we generally think that null findings influence the scientific process:

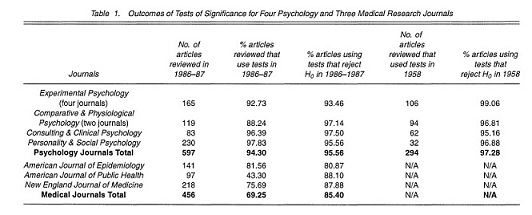

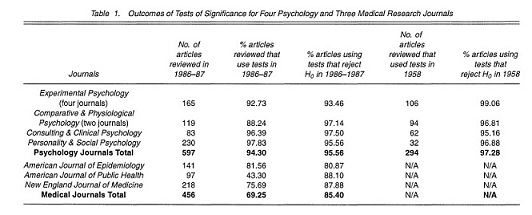

...if you run a perfectly good, well-designed experiment, but your analysis comes up with a null result, you're much less likely to get it published, or even actually submit it for publication. This is bad, because it means that the total body of research that does get published on a particular topic might be completely unrepresentative of what's actually going on. It can be a particular issue for medical science - say, for example, I run a trial for a new behavioural therapy that's supposed to completely cure anxiety. My design is perfectly robust, but my results suggest that the therapy doesn't work. That's a bit boring, and I don't think it will get published anywhere that's considered prestigious, so I don't bother writing it up; the results just get stashed away in my lab, and maybe I'll come back to it in a few years. But what if labs in other institutions run the same experiment? They don't know I've already done it, so they just carry on with it. Most of them find what I found, and again don't bother to publish their results - it's a waste of time. Except a couple of labs did find that the therapy works. They report their experiments, and now it looks like we have good evidence for a new and effective anxiety therapy, despite the large body of (unpublished) evidence to the contrary.

The hand-wringing about negative or null findings is not new... and worryingly, psychology fares worse than most other disciplines, has done so for a long time and (aside from hand-wringing) does little to change this situation. For example, see Greenwald's 'Consequences of Prejudice Against the Null Hypothesis', published in Psychological Bulletin in 1975. The table below comes from Sterling et al (1995) and shows that fewer than 0.2% of papers in this sample accepted the null hypothesis (compare to a sample of medical journals below).

Table 1. From Sterling et al 1995

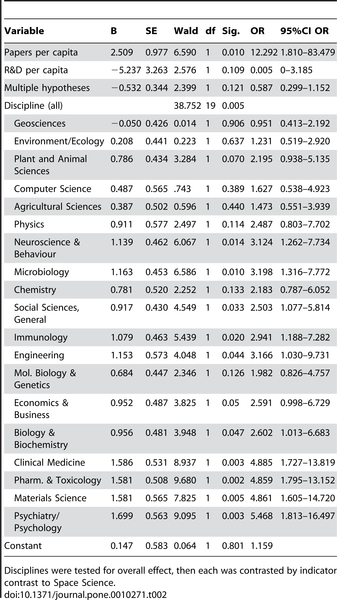

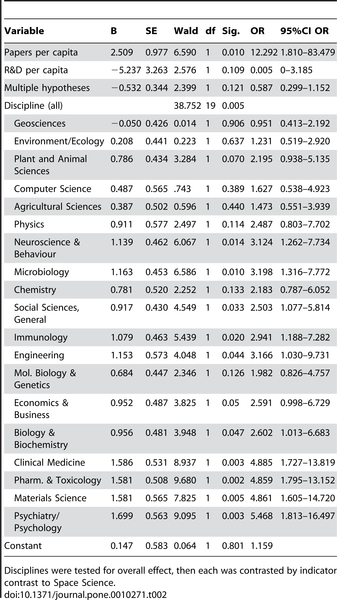

More recently, Fanelli (2010) confirmed the earlier reports that psychology/psychiatry is especially prone to the bias of publishing positive findings. Table 2 below outlines the probability of a paper reporting positive results in various disciplines. Compared to every other discipline, psychology fares the worst - being five times more likely than the baseline (space science) to publish positive results! We might ask: why psychology? And what effect does it have?

Table 2. Psychology/Psychiatry bottom of the league

Issues from Meta-Analysis

It is certainly my experience that negative findings are more commonly published in more medically oriented journals. In this context, the use of meta-analysis becomes very interesting.

The File Drawer effect and Fail-Safes

Obviously, meta-analysis is based on quantitatively summarising the findings that are accessible (tending to be those published, of course). This raises the so-called file-drawer effect, whereby negative studies may be tucked away in a file drawer because they are viewed as less publishable. It is possible in meta-analysis to statistically estimate the file-drawer effect - the original and still widely used method is the Fail-Safe N statistic (introduced by Rosenthal and later adapted for effect sizes by Orwin), which essentially estimates how many unpublished negative studies would be needed to overturn a significant effect size in a meta-analysis. A marginal effect size may require just one or two unpublished negative studies to overturn it, while a strong effect may require thousands of unpublished negative studies to eliminate the effect.

So, at least we have a method for estimating the potential influence of negative unpublished studies.
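To make the fail-safe idea concrete, here is a minimal Python sketch of two common versions of the calculation: Rosenthal's fail-safe N (based on combined z-scores) and Orwin's variant (based on mean effect sizes). The function names and the example numbers are hypothetical, chosen purely for illustration; none of them come from the studies discussed in this post.

```python
import math

Z_CRIT_ONE_TAILED = 1.645  # critical z for one-tailed alpha = .05


def rosenthal_failsafe_n(z_scores, z_crit=Z_CRIT_ONE_TAILED):
    """Unpublished studies averaging z = 0 needed to pull the combined
    (Stouffer) z down to the critical value."""
    k = len(z_scores)
    n = (sum(z_scores) ** 2) / (z_crit ** 2) - k
    # round() guards against floating-point noise before taking the ceiling
    return max(0, math.ceil(round(n, 9)))


def orwin_failsafe_n(effect_sizes, d_criterion, d_missing=0.0):
    """Missing studies with mean effect d_missing needed to pull the
    unweighted mean effect size down to d_criterion."""
    if d_criterion <= d_missing:
        raise ValueError("d_criterion must exceed d_missing")
    k = len(effect_sizes)
    d_mean = sum(effect_sizes) / k
    n = k * (d_mean - d_criterion) / (d_criterion - d_missing)
    return max(0, math.ceil(round(n, 9)))


# Hypothetical z-scores: a marginal result versus a stronger one
marginal = rosenthal_failsafe_n([1.2, 1.3])
stronger = rosenthal_failsafe_n([2.0, 2.5, 1.8, 3.0])
print("fail-safe N (marginal):", marginal)
print("fail-safe N (stronger):", stronger)

# Hypothetical effect sizes (mean d = 0.5) and a 'trivial' criterion of d = 0.25
print("Orwin fail-safe N:", orwin_failsafe_n([0.5, 0.75, 0.5, 0.25], d_criterion=0.25))
```

With these made-up inputs, the marginal pair of studies is overturned by a single null study, whereas the stronger set needs many more, which is the contrast described above.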

Where wild psychologists roam - Negativland by Neu!

Funnel Plots: imputing missing negative findings

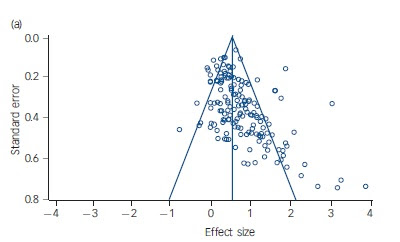

Related to the first point, we may also estimate the number of missing effect sizes and even how large they might be. Crucially, we can then impute the missing values to see how it changes the overall effect size in a meta-analysis. This was recently spotlighted by Cuijpers et al (2010) in their timely meta-analysis of psychological treatments for depression, which highlighted a strong bias toward positive reporting.

A standard way to look for bias in a meta-analysis is to examine funnel plots of the individual study effect sizes plotted against their sample sizes or the standard errors. When no bias exists, studies with smaller error and larger sample sizes cluster around the mean effect size. By contrast, smaller samples and greater error variance produce far more variable effect sizes (in the tails). Ideally, we should observe a nicely symmetrical inverted funnel shape.
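Funnel-plot asymmetry is usually judged by eye, but it can also be put into numbers. One common approach, not described in this post and swapped in here purely as an illustration, is an Egger-style regression: regress each study's standardised effect (d/SE) on its precision (1/SE) and look at the intercept. With a symmetrical funnel the intercept sits near zero; when small studies report inflated effects it drifts away from zero. The sketch below is a bare-bones version with hypothetical data and no significance test on the intercept.

```python
def egger_regression(effects, std_errors):
    """Ordinary least squares of d/SE on 1/SE.

    Returns (intercept, slope): the intercept indexes funnel asymmetry,
    the slope estimates the underlying effect size."""
    y = [d / se for d, se in zip(effects, std_errors)]
    x = [1.0 / se for se in std_errors]
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


# Hypothetical standard errors, from large (precise) to small (noisy) studies
std_errors = [0.10, 0.20, 0.25, 0.40, 0.50]

# Symmetrical case: every study estimates the same effect, d = 0.4
symmetric = [0.4 for _ in std_errors]

# Asymmetrical case: the noisier the study, the bigger the reported effect
asymmetric = [0.4 + 1.5 * se for se in std_errors]

print("symmetric  (intercept, slope):", egger_regression(symmetric, std_errors))
print("asymmetric (intercept, slope):", egger_regression(asymmetric, std_errors))
```

In the asymmetrical case the intercept recovers the small-study inflation that was built into the made-up data, while the slope still points at the underlying effect.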

Turning to the Cuijpers et al paper, the first funnel plot in Figure 1 below is clearly asymmetrical, showing a lack of negative findings (left side). Statistical techniques now exist for imputing or filling in these assumed missing values (see the second plot, where this has been done). The lower funnel plot gives a more realistic picture and adjusts the overall effect size downwards as a consequence: Cuijpers et al imputed 51 missing negative findings (the dark circles), which reduced the effect size considerably, from 0.67 to 0.42.
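The logic of filling in missing studies can be shown with a toy example. The sketch below is a deliberately simplified 'fill' step: it takes the number of missing studies and the centre of the funnel as given (both are assumptions here, whereas formal imputation methods estimate them from the data), mirrors the most extreme positive studies around that centre, and recomputes the inverse-variance weighted mean. The data and function names are hypothetical, and this is not a reconstruction of the analysis in Cuijpers et al.

```python
def pooled_effect(effects, std_errors):
    """Inverse-variance weighted mean effect size."""
    weights = [1.0 / se ** 2 for se in std_errors]
    return sum(w * d for w, d in zip(weights, effects)) / sum(weights)


def fill_by_mirroring(effects, std_errors, k_missing, centre):
    """Impute k_missing studies by reflecting the k_missing largest
    effects about `centre`, keeping each mirrored study's standard error."""
    largest = sorted(range(len(effects)), key=lambda i: effects[i], reverse=True)
    largest = largest[:k_missing]
    filled_effects = list(effects) + [2 * centre - effects[i] for i in largest]
    filled_errors = list(std_errors) + [std_errors[i] for i in largest]
    return filled_effects, filled_errors


# Hypothetical funnel: three precise studies near d = 0.3 and two small,
# noisy studies with large positive effects (no small negative studies)
effects = [0.2, 0.3, 0.4, 0.9, 1.1]
std_errors = [0.1, 0.1, 0.1, 0.3, 0.3]

before = pooled_effect(effects, std_errors)

# Assume two studies are missing and the funnel is centred on d = 0.3
filled_effects, filled_errors = fill_by_mirroring(effects, std_errors, 2, 0.3)
after = pooled_effect(filled_effects, filled_errors)

print(f"pooled effect before filling: {before:.3f}")
print(f"pooled effect after filling:  {after:.3f}")
```

Because the mirrored studies sit on the empty left side of the funnel, the pooled estimate is pulled downwards, which is the same direction of adjustment seen in Figure 1.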

Figure 1. Before and After Science: Funnel Plots from Cuijpers et al (2010)

No One Receiving (Brian Eno - from Before & After Science)

Question: "What do you call a group of negative (null) findings?" Answer: "A positive effect"

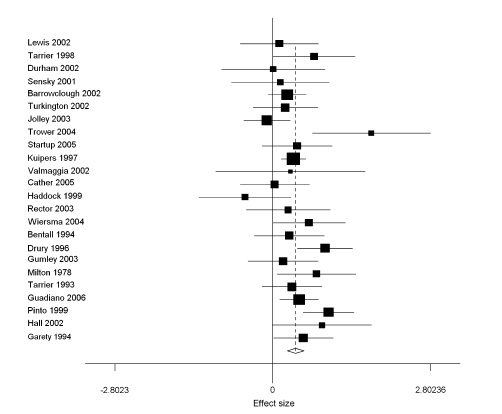

As noted already, some more medically-oriented disciplines seem happier to publish null findings - but what precisely may be some of the implications of this - especially in meta-analyses? Just publishing negative findings is not the end of the questioning!

Although some may disagree, one area that I think I know a fair bit about is the use of CBT for psychosis. The following forest plot in Figure 2 is taken from a meta-analysis of CBT for psychosis from Wykes et al (2008).

Figure 2. Forest plot displaying Effect sizes for CBT as treatment for Psychosis

(from Wykes et al 2008)

In meta-analysis, forest plots are often one of the most informative displays, because they reveal much about the individual studies. This example shows 24 published studies. The crucial information here, however, concerns not the magnitude of individual effect sizes (where the rectangle sits on the X axis), but

...the confidence intervals - these tell us everything!

When the confidence intervals pass through zero, we know the effect size was nonsignificant. So, looking at this forest plot, only 6/24 (25%) studies show clear evidence of being significant trials (Trower 2004; Kuipers 1997; Drury 1997; Milton 1978; Gaudiano 2006; Pinto 1999). Although only one quarter of all trials were clearly significant, the overall effect is significant (around 0.4, as indicated by the diamond at the foot of the figure).

In other words, it is quite possible for a vast majority of negative (null) findings to produce an overall significant effect size - surprising? Other examples exist (e.g. streptokinase: Lau et al 1992; for a recent example, see Rerkasem & Rothwell 2010) and indeed, I referred to one in my recent blog "What's your Poison?" on the meta-analysis assessing LSD as a treatment for alcoholism (where no individual study was significant!).
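The arithmetic behind this is easy to demonstrate. The sketch below pools eight hypothetical small trials using fixed-effect, inverse-variance weighting (a simplification, and not necessarily the model used in any of the meta-analyses cited here). Every individual 95% confidence interval crosses zero, yet the pooled interval does not, because pooling shrinks the standard error. All numbers are invented for illustration.

```python
import math


def ci95(effect, std_error):
    """95% confidence interval for an effect size (normal approximation)."""
    half_width = 1.96 * std_error
    return effect - half_width, effect + half_width


def fixed_effect_pool(effects, std_errors):
    """Inverse-variance (fixed-effect) pooled effect and its standard error."""
    weights = [1.0 / se ** 2 for se in std_errors]
    pooled = sum(w * d for w, d in zip(weights, effects)) / sum(weights)
    pooled_se = math.sqrt(1.0 / sum(weights))
    return pooled, pooled_se


# Hypothetical effect sizes and standard errors for eight small trials
effects = [0.30, 0.45, 0.40, 0.35, 0.50, 0.40, 0.38, 0.42]
std_errors = [0.20, 0.25, 0.22, 0.20, 0.28, 0.25, 0.21, 0.24]

# Count the trials whose own interval includes zero (i.e. nonsignificant)
crossing_zero = sum(
    1 for d, se in zip(effects, std_errors) if ci95(d, se)[0] < 0
)

pooled, pooled_se = fixed_effect_pool(effects, std_errors)
low, high = ci95(pooled, pooled_se)

print(f"{crossing_zero} of {len(effects)} individual CIs cross zero")
print(f"pooled d = {pooled:.2f}, 95% CI [{low:.2f}, {high:.2f}]")
```

So a set of trials that are all individually 'negative' can still yield a 'positive' diamond at the foot of a forest plot.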

Some argue that the negative studies are only negative because they are underpowered; however, this only seems plausible when a moderate-to-large effect size yields a nonsignificant statistical result. Some further speculate that a large trial will prove the effectiveness of the treatment; however, when treatments have subsequently been evaluated in definitive large trials, they have often failed to reach significance. Egger and colleagues have written extensively on the unreliability of conclusions in meta-analyses where small numbers of nonsignificant trials are pooled to produce significant effects (Egger & Davey Smith, 1995).
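For a rough sense of what 'underpowered' means here, the following sketch approximates the power of a simple two-group comparison using the normal approximation. The effect size of 0.4 and the sample sizes are hypothetical, and the formula is a textbook approximation rather than an exact power calculation for any particular trial design.

```python
import math


def normal_cdf(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def approx_power(d, n_per_group, z_crit=1.96):
    """Approximate two-sided power (alpha = .05) for a two-group
    comparison with standardised effect size d and equal group sizes."""
    delta = d * math.sqrt(n_per_group / 2.0)  # expected z statistic
    return normal_cdf(delta - z_crit) + normal_cdf(-delta - z_crit)


# Hypothetical true effect of d = 0.4 at several sample sizes per group
for n in (20, 50, 100, 200):
    print(f"n per group = {n:3d}: power = {approx_power(0.4, n):.2f}")
```

Under these assumptions a trial with 20 participants per group would detect a true effect of 0.4 only about a quarter of the time, so a run of small nonsignificant trials is not, by itself, evidence that the effect is absent or that it is present.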

So, negative or null findings are perhaps more and less worrisome than we may think. It's not just an issue of not publishing negative results in psychology, it's also an issue of what to do with them when we have them.

- Serious Power Failure Threatens The Entire Field Of Neuroscience

Psychology has had a torrid time of late, with fraud scandals and question marks about the replicability of many of the discipline's key findings. Today it is joined in the dock by its more biologically oriented sibling: Neuroscience....

- Meta-matic: Meta-analyses Of CBT For Psychosis

Meta analyses are not a 'ready-to-eat' dish that necessarily satisfy our desire for 'knowledge' - they require as much inspection as any primary data paper and indeed, afford closer inspection...as we have access to all of the data....

- Blinded By Science

"The New Year starts with a test of an established tenet of treatment in schizophrenia." British Journal of Psychiatry 'Highlights' January 2014. It's not often that we hear such phrases, but thus opens the 'highlights' section of the...

- 'representative' - Its Too "too Too" To Put A Finger On

Prove it... just the facts... the confidential This case, this case, this case that I...I've been workin' on so long...Chirpchirp...the birds they're giving you the words The world is just a feeling you undertook. Now the rose, it slows you...

- What's Your Poison - LSD Vs Alcohol

"A single dose of LSD, in the context of various alcoholism treatment programs, is associated with a decrease in alcohol misuse" This was the remarkable conclusion from a meta-analysis just published by Krebs & Johansen (of the Norwegian University...