Neuroscience

We like to break down large problems into smaller ones and look at the whole picture as a sum of its parts. The pieces of a jigsaw puzzle form an image, and different letters come together to form words. There are times when the larger picture isn't a zero-sum game: the whole can be greater than the sum of its constituents, or a chain can be only as strong as its weakest link. No matter how you look at the bigger picture, there are different ways we can find a bigger thing among smaller parts.

The neural networks of the brain are one of those bigger things. Researchers at Google DeepMind have recently created a neural programmer-interpreter (NPI), a type of compositional neural network that combines smaller and larger networks at different levels of complexity to store memory and execute functions. NPI can be trained to perform tasks on small sets of elements and then generalize to much larger sets with remarkable results. An example would be rotating an entire image to a specific orientation by analyzing the location of a small set of the image's pixels. The NPI can learn programs for sorting, addition, and trajectory planning with significant accuracy. At its core, NPI performs these tasks through long short-term memory networks.

In what initially may sound like an oxymoron, long short-term memory (LSTM) networks are built around a loop. The same unit runs once for each element of a sequence and hands its memory on to the next pass, so information can persist across long sequences. Unrolled, each pass through the loop forms one step in a chain, and the steps together make up the overall network. In software, these networks have been used for practical purposes including speech recognition, translation, and language understanding.
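To make the loop concrete, here is a minimal sketch of a single LSTM cell working on plain numbers instead of vectors. It follows the standard textbook gate equations, not anything specific to NPI, and the names `lstm_step`, `run_lstm`, and `WEIGHTS` are made up for this illustration. The weights are hand-picked rather than trained.

```python
import math


def sigmoid(x):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h_prev, c_prev, w):
    """One pass through the loop of a scalar LSTM cell.

    w maps each gate name to (input weight, recurrent weight, bias).
    """
    def pre(name):
        wx, wh, b = w[name]
        return wx * x + wh * h_prev + b

    f = sigmoid(pre("forget"))   # how much of the old memory to keep
    i = sigmoid(pre("input"))    # how much new information to write
    o = sigmoid(pre("output"))   # how much of the memory to expose
    g = math.tanh(pre("cand"))   # candidate new memory content
    c = f * c_prev + i * g       # updated cell state (the longer-lived memory)
    h = o * math.tanh(c)         # updated hidden state (the step's output)
    return h, c


def run_lstm(xs, w):
    """Apply the same cell once per element, passing (h, c) along the chain."""
    h, c = 0.0, 0.0
    states = []
    for x in xs:
        h, c = lstm_step(x, h, c, w)
        states.append((h, c))
    return states


# Hypothetical, hand-picked weights (assumption: not taken from any trained model).
WEIGHTS = {
    "forget": (0.0, 0.0, 2.0),
    "input": (1.0, 0.0, 0.0),
    "output": (0.0, 0.0, 1.0),
    "cand": (1.0, 0.0, 0.0),
}

states = run_lstm([1.0, 0.0, 0.0], WEIGHTS)
```

With these weights the cell writes something into memory on the first step and then lets it fade a little on each later step, which is the "memory transferred between steps" idea in miniature.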

What does this all mean for learning? It simply means more efficiency in how computers can process new information. While we can use this to make cooler and better computers, there are still many barriers to understanding how the human mind works with such a technology. We talk a lot about how the brain is like a computer that can be programmed for various functions and tasks, the same way we tell our laptops and phones to update a Facebook status or send a text. But the mind might not be so easily "computerized" after all. Before we can dive into the implications NPIs or LSTMs have for our research on the brain, we need to understand how well we can even structure the brain as a computer to begin with.

Frances Egan, philosophy professor at Rutgers University, gave a talk at IU a few weeks ago about the Computational Theory of the Mind. In philosophy, this theory treats the mental processes of the mind as computational processes. A physical system computes just in case it implements a well-defined function. It's a mechanical account of thought that can be modeled, and it's physically realizable as well. We can model or simulate a thought, a network, or anything similar if we know the science behind it. It's a very abstract approach to physical detail, one that maps physical states (or whatever state the neural network is in) onto something mathematical.
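One way to picture "a physical system computes just in case it implements a well-defined function" is a toy check like the one below. This is only a sketch under my own assumptions, not Egan's formalism: the state labels, the `TRANSITIONS` table, and the `implements` helper are all hypothetical. The idea is that an interpretation assigns a number to each physical state, and the system counts as computing a function if its physical transitions agree with that function once interpreted.

```python
# Hypothetical physical system: a pair of input states leads to one output state.
# The labels are illustrative, not real measurements.
TRANSITIONS = {
    ("low", "low"): "rest",
    ("low", "high"): "mid",
    ("high", "low"): "mid",
    ("high", "high"): "peak",
}

# Interpretation: maps each physical state onto something mathematical.
INTERPRETATION = {"low": 0, "high": 1, "rest": 0, "mid": 1, "peak": 2}


def implements(transitions, interpretation, function):
    """True if every transition, once interpreted, agrees with the function."""
    return all(
        interpretation[out] == function(interpretation[a], interpretation[b])
        for (a, b), out in transitions.items()
    )


computes_addition = implements(TRANSITIONS, INTERPRETATION, lambda a, b: a + b)
computes_product = implements(TRANSITIONS, INTERPRETATION, lambda a, b: a * b)
```

Under this interpretation the toy system implements addition but not multiplication. The same physical transitions could count as computing something else under a different mapping, which is part of what makes the account so abstract.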

Computational models seem like common sense. When you're navigating your house at night with the lights off, you probably have to rely on an inner sense to determine where you are. You may be a couple of feet from your dinner table or a few steps behind the television. In any case, you would probably "add" and "subtract" these distances relative to one another to figure out where you are in your house. Similarly, vector addition over the underlying neural networks can give us our theories of the mind as well. An LSTM or NPI could use such a process to map out networks of the mind and work from there. We can make predictions about future states and explain a lot of our mind through this theory.
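The "adding" and "subtracting" of distances can be written down as plain vector addition. The sketch below is only an illustration of that arithmetic: the walk, the distances in feet, and the names `add_vectors` and `integrate_path` are invented for the example and say nothing about how neurons actually do it.

```python
def add_vectors(a, b):
    """Add two 2-D displacement vectors component by component."""
    return (a[0] + b[0], a[1] + b[1])


def integrate_path(start, steps):
    """Keep a running position by adding each step to the last position."""
    position = start
    for step in steps:
        position = add_vectors(position, step)
    return position


# Hypothetical walk through a dark house, in feet (made-up numbers).
start = (0.0, 0.0)                               # say, the bedroom door
steps = [(3.0, 0.0), (0.0, 4.0), (-1.0, 0.0)]    # three short moves
position = integrate_path(start, steps)

# "Subtracting" gives the vector that points straight back to the start.
home_vector = (start[0] - position[0], start[1] - position[1])
```

After the three moves the running position is 2 feet across and 4 feet up from the door, and the home vector is simply the opposite of that.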

Whatever the case may be, science and philosophy will always be at odds with one another in the unraveling of the mind, the brain, and everything in between.

- Expanding The Frontiers Of Human Cognition

Chris Chatham: "The goal of developmental cognitive neuroscience is to uncover those mechanisms of change which allow the mature mind to emerge from the brain. The term encompasses a wide spectrum of research with one common fundamental assumption: the...

- Scary Brains And The Garden Of Earthly Deep Dreams

In case you've been living under a rock the past few weeks, Google's foray into artificial neural networks has yielded hundreds of thousands of phantasmagoric images. The company has an obvious interest in image classification, and here's...

- Do Dynamic Models Explain? (#mechanismweek 3)

So far we have learned what a mechanism is, two ways of modelling mechanisms (functional and mechanistic) and we've identified that cognitive science is currently dominated entirely by functional models which will never actually turn into mechanistic...

- What's The Difference Between Perception And Conception?

As Andrew has been tackling a new job description for the brain (part 1 and part 2), several comments have been made that suggest that his approach (and the ecological stance in general) might be fine for perception/action, but not for other types of...

- A Journey Into Our Neural Code (and The Mind Itself)

8-bit brain credit to Mitch Bolton. The brain is cool, but it's also really confusing. Given how complex it is, can we develop a model of information from it? Often, when we study the brain, we get caught up in the middle of a lot of things, both...


Mind-reading: understanding how the brain learns

|

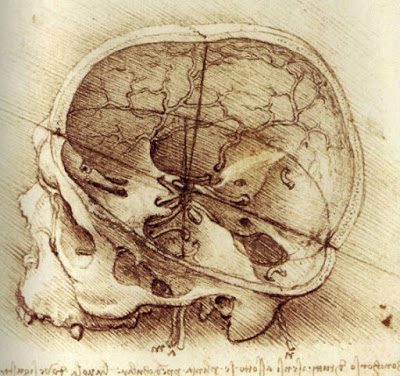

| A rendering from da Vinci's sketch. |


|

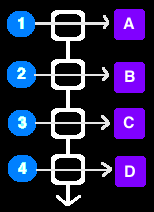

| In a simple example of an LSTM, different tasks (shown with numbers connected to letters) can be performed and concatenated with one another to create a network. These networks are often repetitive and can vary in the ways memory is transferred between each step. |
